ETHERCAT WAIT SYNC

- papagno-source

04 Apr 2025 10:34 - 04 Apr 2025 11:13 #325669

by papagno-source

ETHERCAT WAIT SYNC was created by papagno-source

Hi all.

I normally use Debian 10 with EtherCAT and have no problems.

Now I am testing Debian 12 (the ISO from the LinuxCNC git, with uspace) and the Corbett EtherCAT installation procedure, points 3 and 4.

When I start the configuration, the drive and I/O values are only refreshed 10 seconds after start.

In dmesg I get:

ETHERCAT WARNING 0-0 SLAVE DID NOT SYNC AFTER 5000 MS

ETHERCAT WARNING 0-1 SLAVE DID NOT SYNC AFTER 5000 MS

ETHERCAT WARNING 0-2 SLAVE DID NOT SYNC AFTER 5000 MS

ETHERCAT WARNING 0-3 SLAVE DID NOT SYNC AFTER 5000 MS

ETHERCAT WARNING 0-4 SLAVE DID NOT SYNC AFTER 5000 MS

ETHERCAT WARNING 0-5 SLAVE DID NOT SYNC AFTER 5000 MS

After this time the values refresh quickly and operation is normal, but I don't understand why LinuxCNC has this wait at start before it reads the drive values.

The same configuration with Debian 10, EtherCAT 1.5.2 and LinuxCNC 2.9.0-pre0 runs OK.
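To see at a glance which slaves hit the timeout, a small parser over the `dmesg` text can help. This is a minimal sketch: the pattern is built from the warning lines quoted above (matched case-insensitively, since the real kernel log capitalisation may differ from what was typed), and reading the two numbers as master index and slave position is an assumption.

```python
import re

# Built from the warning lines quoted in the post; IGNORECASE because the
# real kernel log may use different capitalisation. The optional colon
# covers an assumed "0-1:" style prefix.
SYNC_WARNING = re.compile(
    r"ethercat\s+warning\s+(\d+)-(\d+):?\s+slave did not sync after\s+(\d+)\s*ms",
    re.IGNORECASE,
)


def unsynced_slaves(log_text):
    """Return (master, slave_position, timeout_ms) for every sync warning."""
    return [
        tuple(int(group) for group in match.groups())
        for match in SYNC_WARNING.finditer(log_text)
    ]


if __name__ == "__main__":
    sample = """
    ETHERCAT WARNING 0-0 SLAVE DID NOT SYNC AFTER 5000 MS
    ETHERCAT WARNING 0-5 SLAVE DID NOT SYNC AFTER 5000 MS
    """
    for master, position, timeout_ms in unsynced_slaves(sample):
        print(f"master {master}, slave {position}: no DC sync within {timeout_ms} ms")
```

In practice you would feed it the output of `dmesg` captured right after starting the configuration.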

Last edit: 04 Apr 2025 11:13 by papagno-source.

- Grotius

11 Apr 2025 22:25 - 11 Apr 2025 22:26 #326124

by Grotius

Replied by Grotius on topic ETHERCAT WAIT SYNC

Hi,

The Corbett install uses systemctl to start and stop the EtherCAT bus.

In Debian 12 and 13 I use `/etc/init.d/ethercat start`.

At what point is your EtherCAT bus started? At PC boot time?

Last edit: 11 Apr 2025 22:26 by Grotius.

- Hakan

11 Apr 2025 23:06 #326129

by Hakan

Replied by Hakan on topic ETHERCAT WAIT SYNC

Is it the same if you just install the ready-made apt package for EtherCAT instead of building the EtherCAT master yourself?

- rodw

12 Apr 2025 05:15 #326145

by rodw

Replied by rodw on topic ETHERCAT WAIT SYNC

> Is it the same if you just install the ready apt package with ethercat instead of building ethercat master yourself?

I think it would be the same, but the released package might be locked to a tagged release, like we do with 2.9.4 vs 2.10.

- Hakan

12 Apr 2025 07:12 #326150

by Hakan

Replied by Hakan on topic ETHERCAT WAIT SYNC

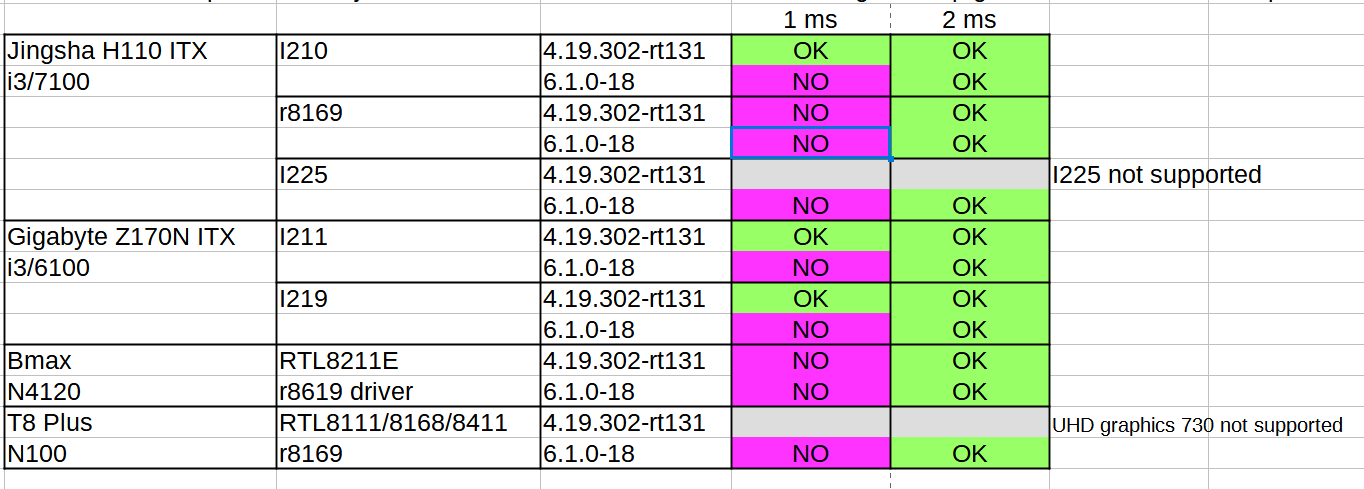

It could be that the 4.19 kernel in Debian 10 performs much better than kernel 6.1 in Debian 12.

When I was working on my own EtherCAT board a year ago, I had some timing issues and compared the network adapters and Linux kernel versions in the computers I had around. Linux 4.19 performed better, especially with Intel adapters. Often I had to go up to a 2 millisecond servo loop to get acceptable performance.

This is just to show that several factors influence timing performance, and when you compare Debian 12 and 10, the kernel version is definitely one of them.

The network adapter is another. If you have a Realtek network adapter, have tried all the settings rodw lays out in his document, and have a free PCIe slot in the computer, you can try a cheap Intel network adapter, for example an I210, I225 or I226. I have had good luck with them.

I'm not sure how it comes in here, but you should nevertheless bring the normal real-time jitter, the one you check with latency-histogram, down to single digits: less than ±10 microseconds.
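To make the ±10 microsecond target concrete, the sketch below computes how far each measured period of a periodic thread deviates from its nominal period. It is a minimal illustration under an assumed input (a list of wake-up timestamps in nanoseconds); it does not read or reproduce latency-histogram's own output.

```python
"""Period jitter of a periodic real-time thread from wake-up timestamps.

Hypothetical input format: a list of wake-up times in nanoseconds. This is
not what latency-histogram prints; it only illustrates the +/-10 us target.
"""


def period_jitter_ns(timestamps_ns, nominal_period_ns):
    """Return (min, max) deviation of each measured period from nominal."""
    if len(timestamps_ns) < 2:
        raise ValueError("need at least two timestamps")
    deviations = [
        (later - earlier) - nominal_period_ns
        for earlier, later in zip(timestamps_ns, timestamps_ns[1:])
    ]
    return min(deviations), max(deviations)


def within_budget(timestamps_ns, nominal_period_ns, budget_ns=10_000):
    """True if no period deviates from nominal by more than budget_ns."""
    lowest, highest = period_jitter_ns(timestamps_ns, nominal_period_ns)
    return -budget_ns <= lowest and highest <= budget_ns


if __name__ == "__main__":
    wakeups = [0, 1_003_000, 1_998_000, 3_000_000]  # 1 ms servo thread
    print(period_jitter_ns(wakeups, 1_000_000))  # -> (-5000, 3000)
    print(within_budget(wakeups, 1_000_000))     # -> True
```

A worst case of -5 µs / +3 µs is inside the single-digit budget; one period arriving 12 µs late would already fail it.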

The warning you get, and the delay, are a nuisance more than a real problem. The EtherCAT master will synchronize the time of the clients; it just takes longer than 5 seconds. Googling around for this issue, one can see that it has been there for more than 10 years, and one way to get rid of the warning is simply to increase the 5000 ms limit when compiling the EtherCAT master yourself. You will still get the delay, though.

Here is a short article on DC clock synchronization www.acontis.com/en/dcm.html
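Why raising the limit removes the warning but not the delay can be shown with a toy model of a "wait for DC sync, give up after a timeout" step. Everything here is a hypothetical simplification, not the IgH master's actual algorithm: the slave's clock offset is assumed to shrink by a fixed fraction every bus cycle, the master checks it once per cycle, and "synced" means the offset is within a threshold.

```python
"""Toy model of a 'wait for DC sync, give up after a timeout' step.

Hypothetical assumptions (not taken from the IgH master source): the
slave clock offset shrinks by a fixed fraction every bus cycle, the
master checks it once per cycle, and "synced" means |offset| <= threshold.
"""


def wait_for_dc_sync(initial_offset_ns, correction_gain, threshold_ns,
                     cycle_ms, timeout_ms):
    """Return the elapsed ms until synced, or None if timeout_ms is reached."""
    offset = float(initial_offset_ns)
    elapsed_ms = 0
    while elapsed_ms < timeout_ms:
        if abs(offset) <= threshold_ns:
            return elapsed_ms
        offset -= correction_gain * offset  # proportional correction per cycle
        elapsed_ms += cycle_ms
    return None


if __name__ == "__main__":
    # Hypothetical numbers: 1 ms offset at start, 0.1 % correction per 1 ms cycle.
    for timeout_ms in (5000, 20000):
        result = wait_for_dc_sync(1_000_000, 0.001, 10, 1, timeout_ms)
        if result is None:
            print(f"slave did not sync after {timeout_ms} ms (model)")
        else:
            print(f"synced after {result} ms (model)")
```

With these made-up numbers the 5000 ms limit produces the warning, while a 20000 ms limit lets the model converge after roughly 11.5 s: the warning disappears, but the wait is the same.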

- rodw

12 Apr 2025 10:15 #326156

by rodw

Replied by rodw on topic ETHERCAT WAIT SYNC

These issues have been around for a long time. It is just that we never released a version of LinuxCNC on Debian 11, so we did not know about them. Plus, at that time there was no path to install EtherCAT on Debian 11 except for a .deb that Grotius made for me. The PC was running a Realtek R8125 NIC, and the driver was first released with Debian 11, so it was a rock-and-a-hard-place situation. Eventually I migrated my other PCs to Debian 12 before it was released because of this problem.

At one stage, while trying to solve this issue on Debian 12, one of the RT devs indicated it was probably introduced in about kernel version 5.9, and Bullseye was released with kernel 5.10. The other issue is that it is mostly to do with the Realtek R8111/R8168 NIC, and it is not part of the kernel, so nobody cares; and if they do, there is a non-free driver (that is not in the non-free-firmware included with Debian 12).

This issue has mainly shown up as "Error finishing read" on Ethernet-connected Mesa HM2 cards. I never experienced problems with EtherCAT on PCs that had issues with Mesa cards.

Some of the issues are due to us never having needed the CPU affinity settings that were routinely used in the RT world. New features such as Energy-Efficient Ethernet also had an impact.
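As an illustration of the kind of CPU affinity setting meant here (not rodw's guidelines themselves), the sketch below pins a process to chosen cores and builds the hex mask format that `/proc/irq/<N>/smp_affinity` accepts: comma-separated 32-bit words, most significant first. Which core you isolate and the NIC's IRQ number (look it up in `/proc/interrupts`) are assumptions for your machine, and writing an IRQ mask requires root.

```python
import os


def irq_affinity_mask(cpus):
    """Hex CPU mask as comma-separated 32-bit words, e.g. {3} -> '8'."""
    mask = 0
    for cpu in cpus:
        if cpu < 0:
            raise ValueError(f"invalid CPU number: {cpu}")
        mask |= 1 << cpu
    if mask == 0:
        raise ValueError("need at least one CPU")
    words = []
    while mask:
        words.append(mask & 0xFFFFFFFF)
        mask >>= 32
    words.reverse()  # most significant 32-bit word first
    return ",".join([format(words[0], "x")] + [format(w, "08x") for w in words[1:]])


def pin_current_process(cpus):
    """Restrict this process to `cpus` (Linux only) and return the new set."""
    os.sched_setaffinity(0, cpus)
    return os.sched_getaffinity(0)


if __name__ == "__main__":
    isolated = {3}  # hypothetical: the core reserved for the RT thread
    # <N> is a placeholder for the NIC's IRQ number from /proc/interrupts.
    print("echo", irq_affinity_mask(isolated), "> /proc/irq/<N>/smp_affinity")
```

Keeping the NIC interrupt and the servo thread on the same isolated core, or deliberately on different ones, is a tuning choice to try both ways while watching latency-histogram.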

Personally, I think the problem is under control if you follow my guidelines. I think the days of using 2-core PCs are over, although some people have got them going with my kernel. I will say my old J1900 has never performed better than it does on Debian 12.

However, what concerns me is that this is a new issue you guys have uncovered. I think the correct course is to raise an issue with the IgH EtherLab master on their GitLab repo.

- dm17ry

12 Apr 2025 14:02 #326165

by dm17ry

Replied by dm17ry on topic ETHERCAT WAIT SYNC

I've been playing with Ethernet in LinuxCNC lately and came across some interesting reading from Intel: eci.intel.com/docs/3.3/development/performance/tsnrefsw.html

I've got an i5 gen11 motherboard with i226-V Ethernet controllers on it, and my home-brewed Zynq-7010 board with PTP-capable gigabit PHYs. Working bare metal on the Zynq, I have pretty good control over latencies, so I am trying to find ways to tighten LinuxCNC's network jitter as much as I can.

Intel's product brief mentions that:

> Commercial and server versions (LM/IT) support the TSN standards and features and have been designed and validated accordingly, and maintained as part of its product lifecycle, including AVNU certification. Non-commercial version (V) does not support TSN; although some low-level features may be partially exposed temporarily, its usage is strongly discouraged

Anyway, I patched RTAPI to use CLOCK_TAI and tried SO_TXTIME with an ETF qdisc, without much luck unfortunately: the TX unit promptly hangs.
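With SO_TXTIME and an ETF qdisc, each packet carries a launch time (passed per packet as an SCM_TXTIME control message) on the qdisc's clock, typically CLOCK_TAI, and packets whose launch time has already passed are dropped. That is why the RT thread has to compute launch times on that clock with some lead. The sketch below only does that arithmetic: the first period-aligned launch time after "now plus a minimum lead". The period, offset and lead values are hypothetical, and it does not touch sockets or the qdisc.

```python
import time


def next_launch_time_ns(now_ns, period_ns, offset_ns=0, min_lead_ns=0):
    """First t = k*period_ns + offset_ns with t > now_ns + min_lead_ns."""
    if period_ns <= 0:
        raise ValueError("period must be positive")
    if not 0 <= offset_ns < period_ns:
        raise ValueError("offset must lie within one period")
    k = (now_ns + min_lead_ns - offset_ns) // period_ns + 1
    return k * period_ns + offset_ns


if __name__ == "__main__":
    # time.CLOCK_TAI needs Linux and Python 3.9+. It equals CLOCK_REALTIME
    # unless the kernel's TAI offset has been set (it defaults to 0).
    now = time.clock_gettime_ns(time.CLOCK_TAI)
    launch = next_launch_time_ns(now, period_ns=1_000_000, offset_ns=100_000,
                                 min_lead_ns=200_000)
    print(f"now={now} launch={launch} lead={launch - now} ns")
```

Aligning launch times to fixed period boundaries, rather than "now + constant", keeps the transmit instants on a grid even when the thread itself wakes up with jitter.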

The TAPRIO qdisc looks much better and seems to be working OK on my i226-V: with trial and error in setting up the gates and tweaking IRQ affinity, I am now consistently getting around 100 ns RX time jitter on the Zynq with a flood ping running in parallel.
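TAPRIO implements a time-aware gate schedule in the style of IEEE 802.1Qbv: a repeating cycle is split into intervals, and in each interval a bitmask says which traffic classes may transmit. The sketch below models such a gate control list, answers "which gates are open at time t", and renders the entries in the `sched-entry S <gatemask> <interval>` form described in the tc-taprio man page. The three-entry 1 ms schedule is hypothetical (not dm17ry's settings), and a complete `tc qdisc ... taprio` command also needs parameters such as num_tc, map, queues, base-time and clockid, which are not generated here.

```python
def cycle_time_ns(schedule):
    """Total cycle time of a gate control list [(gate_mask, interval_ns), ...]."""
    return sum(interval for _, interval in schedule)


def open_gates_at(schedule, base_time_ns, t_ns):
    """Gate mask in force at t_ns for a schedule repeating from base_time_ns."""
    if t_ns < base_time_ns:
        raise ValueError("schedule has not started yet")
    cycle = cycle_time_ns(schedule)
    if cycle <= 0:
        raise ValueError("cycle time must be positive")
    phase = (t_ns - base_time_ns) % cycle  # position inside the current cycle
    for gate_mask, interval in schedule:
        if phase < interval:
            return gate_mask
        phase -= interval
    raise AssertionError("unreachable: phase always falls inside the cycle")


def sched_entries(schedule):
    """Render the list as tc-taprio 'sched-entry S <mask> <interval>' arguments."""
    return " ".join(f"sched-entry S {mask:02x} {interval}" for mask, interval in schedule)


if __name__ == "__main__":
    # Hypothetical 1 ms cycle: TC0 alone for 300 us, then TC1, then TC2.
    schedule = [(0x01, 300_000), (0x02, 300_000), (0x04, 400_000)]
    print(sched_entries(schedule))
    print(hex(open_gates_at(schedule, 0, 350_000)))  # -> 0x2
```

Giving the real-time traffic class an exclusive window at a fixed point in each cycle is what lets the schedule bound its latency, provided the cycle matches the servo period.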

I haven't found a proper datasheet for the i226; I will probably try to buy an i226-LM chip from Mouser and solder it in. I will also check the i210, but it does not support TAPRIO offload. Maybe ETF+SO_TXTIME works on it, though.
